Evaluating Text-to-Image Synthesis: Survey and Taxonomy of Image Quality Metrics

https://arxiv.org/abs/2403.11821 2024

Abstract

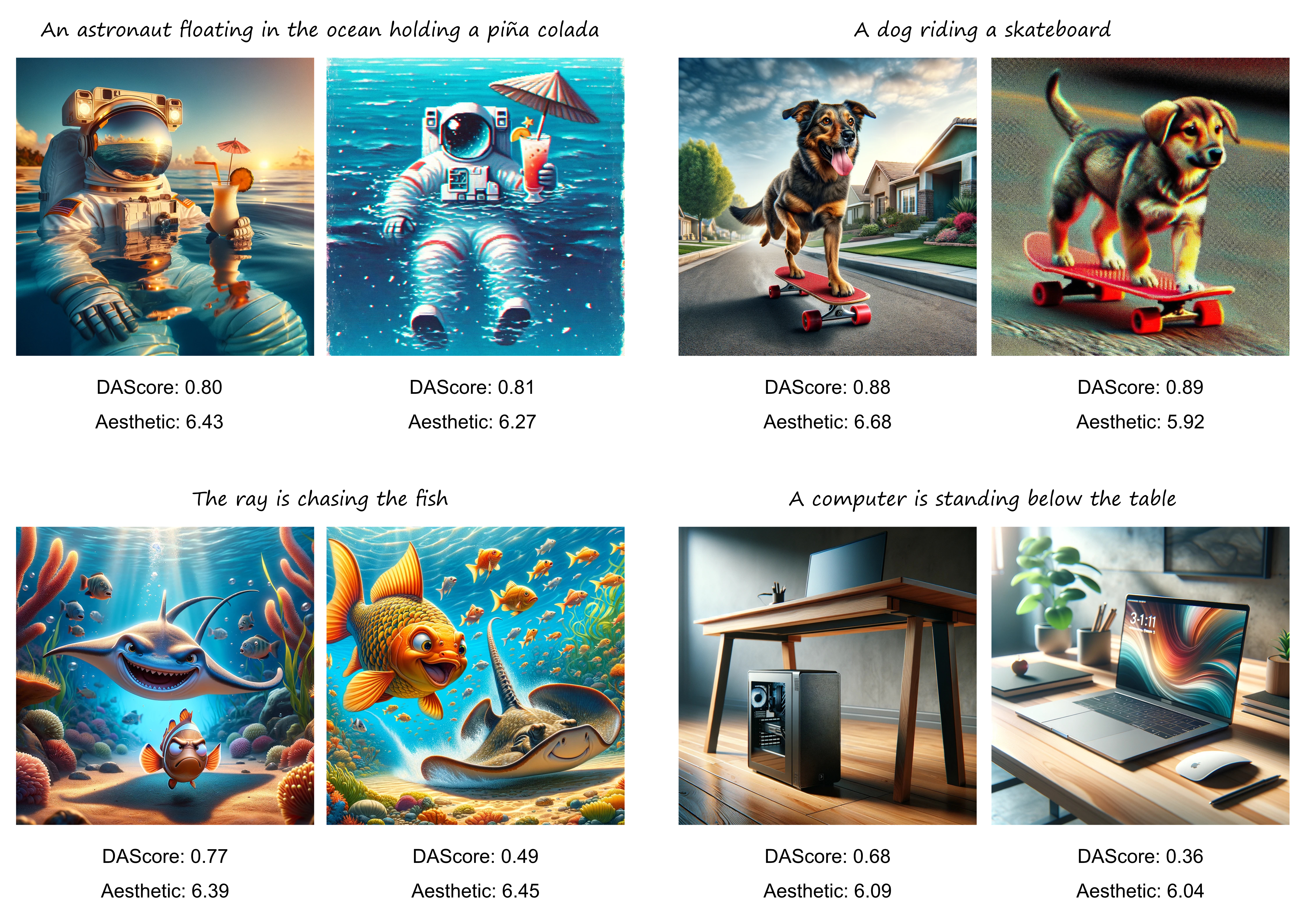

Recent advances in text-to-image synthesis, enabled by a combination of language and vision foundation models, have led to a proliferation of available tools and increased attention to the field. When conducting text-to-image synthesis, a central goal is to ensure that the content of the text and the image is aligned. As such, there exist numerous evaluation metrics that aim to mimic human judgement. However, it is often unclear which metric to use for evaluating text-to-image synthesis systems, as their evaluation is highly nuanced. In this work, we provide a comprehensive overview of existing text-to-image evaluation metrics. Based on our findings, we propose a new taxonomy for categorizing these metrics. Our taxonomy is grounded in the assumption that there are two main quality criteria, namely compositionality and generality, which ideally map to human preferences. Ultimately, we derive guidelines for practitioners conducting text-to-image evaluation, discuss open challenges of evaluation mechanisms, and surface limitations of current metrics.

Bibtex

@article{hartwig2024survey,
  title={Evaluating Text-to-Image Synthesis: Survey and Taxonomy of Image Quality Metrics},
  author={Hartwig, Sebastian and Engel, Dominik and Sick, Leon and Kniesel, Hannah and Payer, Tristan and Poonam, Poonam and Gl{\"o}ckler, Michael and B{\"a}uerle, Alex and Ropinski, Timo},
  year={2024},
  journal={arXiv preprint arXiv:2403.11821}
}