Projects

AuCity3

The second stage of this cooperative research project pursues the central goals for teaching in higher education: reflecting the heterogeneity of students and fostering collaborative, interdisciplinary cooperation as well as multidisciplinary digital and social competences. The identified success factors will be integrated into a transfer module to secure the transfer of knowledge to other teaching institutions and schools. The central goals focus on two research and development questions: adaptivity and collaboration.

Cooperation Partner(s):

- Prof. Dr. Tina Seufert (Ulm University)

- Prof. Dr. Enrico Rukzio (Ulm University)

- Prof. Dr. Steffi Zander (h2 Hochschule Magdeburg-Stendal)

- Prof. Dr.-Ing. Christian Koch (Bauhaus-Universität Weimar)

- Univ.-Prof. Dr.-Ing. Jörg Londong (Bauhaus-Universität Weimar)

Funding Source(s):

VIGATU (VIrtual GAstro TUtor)

Colonoscopy for the prevention of colorectal carcinoma is one of the most frequently performed endoscopic medical examinations. Despite detailed and evidence-based guidelines, the advanced training of physicians and non-medical staff in endoscopy is often based on learning by doing. A central, guideline-based educational programme thus cannot be assured. Simulator training focusing on the trainee has proven effective, but it has not been adopted in general due to the high cost of endoscopy simulators and the instruction time needed to convey basic guideline knowledge. Virtual Reality (VR) allows for a more cost-efficient simulation of a complete endoscopic environment in a risk-free setting. The VIGATU project aims at developing a VR-based learning and teaching system that enables physicians and other medical personnel to acquire the knowledge and skills to carry out guideline-compliant endoscopic interventions. The PI on this project is Timo Ropinski.

Cooperation Partner(s):

- Adjunct Prof. Alexander Hann, MD (Würzburg University Hospital)

- Monika Engelke (Bildungswerk e.V., Herne)

- Prof. Dr. Tina Seufert (Ulm University)

- Benjamin Mühling (ThreeDee GmbH)

Funding Source(s):

RACOON

The RACOON infrastructure is based on a Germany-wide server system with network nodes at all German university hospitals (RACOON-NODES). The infrastructure architecture represents a combination of decentralized and centralized components (RACOON-CENTRAL) to form a powerful overall concept. This provides both the advantages of a computing environment distributed at the locations of all university hospitals and the efficiency of a secure central environment. Further advantages of our architecture are the fast and secure start of operation of the individual network nodes and a flexible expandability of the overall system by means of its autonomous software modules.

AKTISmart KI

This project aims at analysing data from wearable motion sensors by means of artificial intelligence. It identifies and analyses, on the one hand, certain movement patterns in everyday life (e.g. getting up from a chair) and, on the other hand, certain pathological, disease-induced incidents (falls) in order to assess the patient’s general health or the progression of a therapy. The technical tools are to be designed such that medical personnel as well as laypersons can use them with ease. The project simultaneously addresses questions linked to ethics, data security, and product liability of medical equipment. The PI on this project is Timo Ropinski.

Cooperation Partner(s):

- Prof. Dr. Enrico Rukzio (Institute of Media Informatics, Ulm University)

- Prof. Dr. Florian Steger (History, Philosophy and Ethics of Medicine, Ulm University)

- Prof. Dr. Jochen Klenk (Robert-Bosch Gesellschaft für Medizinische Forschung mbH, Stuttgart)

- Prof. Dr. Alexandra Jorzig (IB-Hochschule Berlin)

Funding Source(s):

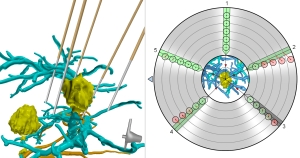

TRANSNAV

The project aims at developing a multimodal navigation system to assist surgeons in planning, executing, and documenting catheter interventions in arteries. It includes compensation for patient movements due to breathing and heartbeat. The visualisation is enhanced by a deep learning application that delivers useful visual navigation aids. The system provides 3D visualisations and can thus show the exact position of surgical tools and the patient’s organs. When applied, it helps to avoid or reduce the contrast agents that established methods usually require to make interventional tools visible. The subproject on 3D multimodal visualisation and documentation of cardiological image data is led by Timo Ropinski, Visual Computing Group.

Cooperation Partner(s):

- Mediri GmbH, Heidelberg

- Prof. Dr. Volker Rasche (Medical Centre, Ulm University)

- 1000 shapes GmbH, Berlin

- Fraunhofer MEVIS, Bremen

Funding Source(s):

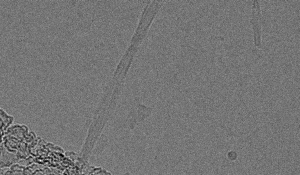

ABEM

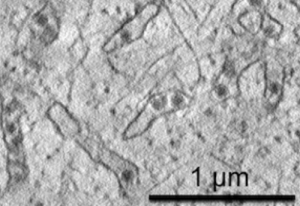

Within this project, attention-based deep learning techniques are developed to reduce the amount of annotated data required for the analysis of electron microscopy data, whereby the attention mechanism is supported by unsupervised denoising techniques and super-resolution approaches. As these techniques do not need any annotated data, they are applicable to tasks where user annotations are nearly impossible to acquire, e.g., detection of the 4D orientation of macromolecular structures. We will analyze our techniques by examining the outcomes when applied to images of Human betaherpesvirus 5/Human cytomegalovirus (HCMV) and Zika virus (ZIKV) virions in the cell. The PIs on this project are Timo Ropinski and Pedro Hermosilla from the Visual Computing Group, and Clarissa Read from the Central Facility for Electron Microscopy.

Cooperation Partner(s):

Funding Source(s):

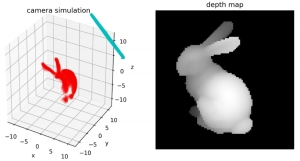

DREAM

Within this project, a novel 3D camera is developed, which exploits laser modulation for 3D imaging. At the Visual Computing Group, we work on image synthesis algorithms to simulate this camera, in order to obtain training data that can be used to enhance the acquired images. We will further devise deep learning architectures for the 3D reconstruction process.

Cooperation Partner(s):

Funding Source(s):

AuCity2

The purpose of this project is to develop and analyze new mixed reality techniques for teaching in higher education. We work on the applicability of different mixed reality scenarios, learning analytics, and mixed reality authoring in collaboration with psychologists and engineers.

Cooperation Partner(s):

- Prof. Dr. Tina Seufert (Ulm University)

- Prof. Dr. Enrico Rukzio (Ulm University)

- Prof. Dr. Steffi Zander (Bauhaus-Universität Weimar)

Funding Source(s):

Collaborative Virtual Reality

In this project, a virtual collaboration lab for the analysis of scientific data is planned, designed, implemented and rolled out. The focus is on biomedical analysis, but also on numerical simulation and design verification. In phase one of the project, we will work on the conception and realization at the Universities of Ulm and Stuttgart. For phase two, a roll-out to the Universities of Konstanz and Heidelberg and the Karlsruhe Institute of Technology is planned. Besides generalizability, which is targeted by addressing orthogonal application scenarios, the main goals are usability, sustainability and scalability.

Cooperation Partner(s):

- Dr. Guido Reina (University of Stuttgart)

- Prof. Dr. Daniel Weiskopf (University of Stuttgart)

- Prof. Dr. Stefan Wesner (Ulm University)

Funding Source(s):

Visual Analysis of Protein-Ligand Interaction

The visual analysis of protein structures has been researched in several projects within the past few years. While molecular structures are relevant, understanding protein interactions requires focusing on both interaction partners and taking their physico-chemical properties into account. Thus, within this research project, we plan to enable the visual analysis of protein-ligand interactions as captured in state-of-the-art simulations by focusing on these properties. The main goal is to make these time-dependent data sets more accessible for protein designers, and to help them develop adaptations that enable a more efficient interaction. In particular, we will develop novel visualization techniques which convey the relevant properties by means of abstract representations as well as structural embeddings. We will enhance these techniques for a visual comparison of different interactions, which will eventually enable us to develop domain-centered immersive visual analytics approaches. We will evaluate our methods together with domain experts in order to ensure their effectiveness for the visual analysis of protein-ligand interactions.

Cooperation Partner(s):

- Dr. Michael Krone (University of Stuttgart)

- Assoc.-Prof. Barbora Kozlikova (Masaryk University, Czech Republic)

Funding Source(s):

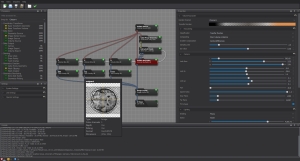

Interoperable Inviwo

Within this project, we plan to enable the interoperable extension of the Inviwo visualization software. The main pillar of our strategy is to lower the barrier for users and developers to create and distribute their own Inviwo extensions. To this end, we will ease the development of custom functionality and, together with the Communication and Information Centre, establish a system for distributing this functionality to others. Thus, in contrast to modern source code version management systems, non-programmers will also be able to distribute their creations and thereby directly extend the software. To fulfill the quality assurance requirements which emerge from such an approach, we will further implement and establish automated testing procedures.

Cooperation Partner(s):

Funding Source(s):

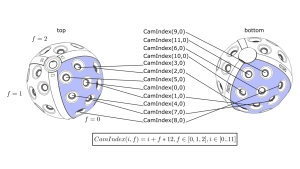

Reconstruction and Visualization of Virtual Reality Content

Together with Immersight, we work on the reconstruction and visualization of indoor virtual reality scenes. The goal of the project is to use consumer camera systems to capture an indoor environment, which is subsequently reconstructed to obtain a 3D model. To modify the model, intuitive interaction paradigms are developed, such that the user is able to sketch design alternatives based on the data acquired from the real-world environment. By developing visualization techniques tailored for virtual reality, these design alternatives will become explorable in an immersive fashion.

Cooperation Partner(s):

Funding Source(s):

Neural Network Visualization in Biology

Together with the Institute of Protein Biochemistry, we work towards visual interfaces that enable life science domain experts to use deep neural networks for image classification in biology. While the latest progress in deep learning makes this family of techniques valuable for many imaging-based research disciplines, domain experts often lack the in-depth knowledge required to use these techniques effectively. Within this project, we focus on the reconstruction of microfibrils, whereby the goal is to develop a visual interface that enables biologists to exploit deep neural networks for this task.

Cooperation Partner(s):

Funding Source(s):

Interactive Visualization of the Lion Man in a Digital Exhibition

The Lion Man is one of the oldest man-made works of art. The sculpture was carved about 40,000 years ago from mammoth ivory and was found just before the outbreak of the Second World War in the Lonetal, at the foot of the Swabian Alb. Within this project, we visualize digital scans of the Lion Man in close cooperation with the Ulmer Museum and in connection with the successful “Ice Age Art” application for a UNESCO World Cultural Heritage site. The aim of the project is the development of an interactive media station which primarily supports an interactive exploration of the Lion Man, but also of the Lonetal and the Lonetal caves. Through the media station, the Lion Man and its place of discovery can be explored interactively via a touch screen. In this context, “comprehensible” 3D visualization is a central aspect, which allows museum visitors, for the first time, to interact with the Lion Man in order to view it from all sides and at any magnification level. In addition, virtual cuts can be made through the sculpture to explore its inner structures. The direct touch interaction creates an unprecedented proximity to the sculpture, which today can only be viewed in a glass display case. The 3D visualizations are additionally enriched by textual and visual information to illuminate the origin and discovery history of the Lion Man.

Cooperation Partner(s):

Funding Source(s):

Multi-Touch Powerwall Visualization

With funding from the Carl Zeiss Stiftung, we are currently setting up our interactive visualization lab, which contains a high-resolution, multi-touch powerwall as its centerpiece. The powerwall, consisting of a Stewart StarGlas 60 Screen, has a screen size of 179 in with a width of 3.88 m and a height of 2.58 m. Content is back-projected with 24 full HD projectors, which generate a stereoscopic projection by means of polarization filters. To enable multi-user touch input, the powerwall is equipped with a DEFI X Series Multi-Touch Module, which enables us to track 10 individual touch events. Interactive 2D and 3D content is created through our high-end rendering cluster equipped with the latest NVIDIA graphics hardware. The lab enables demos, multi-user collaborations as well as public outreach.

Funding Source(s):

Advanced Augmented Reality Based Visualization Techniques for Surgery

Within this project, an approach and system for image-guided laparoscopic liver surgery is developed. In close cooperation among surgeons, engineers and computer scientists, all image-guiding aspects related to laparoscopy are in focus. Thus, the project covers research in navigation technology, medical image processing, interactive visualization as well as augmented reality. In our subproject, we specifically aim at multimodal medical visualization and multimodal data fusion. By incorporating pre- and intraoperative data, we are able to generate a fused data set, which is visualized interactively to support surgeons during liver interventions. The visualization is enhanced such that occlusion-free views of inner lesions become possible without compromising depth perception. All work is conducted in the context of an existing navigation system, and the final goal is to conduct clinical trials of a novel navigation system.

Cooperation Partner(s):

Funding Source(s):

Collaborative Visual Exploration and Presentation

Visualization plays a crucial role in many areas of e-Science. In this project, we aim at integrating visualization early on in the discovery process, in order to reduce data movement and computation times. Within many research projects, visualization is currently often established as the last step of a long pipeline of compute- and data-intensive processing stages. While the importance of this use of visualization is well known, employing visualization only as a final step is not enough when dealing with e-Science applications. We address the challenges arising from the early collaborations enabled by these in-situ visualizations, and we investigate what role visualization plays in strengthening such collaborations by enabling a more direct interaction between researchers.