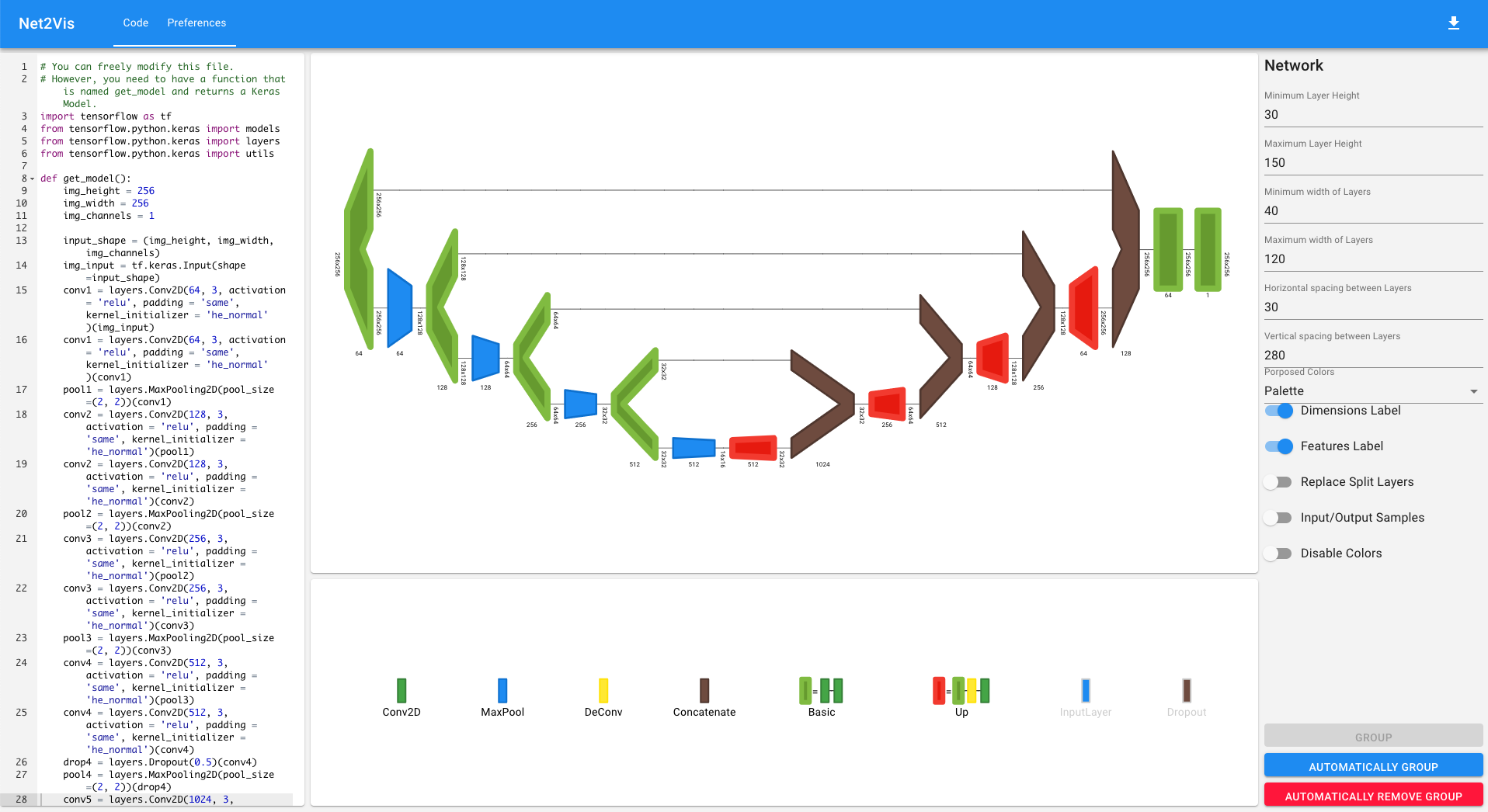

Net2Vis: Transforming Deep Convolutional Networks into Publication-Ready Visualizations

IEEE Transactions on Visualization and Computer Graphics 2021

Abstract

To convey neural network architectures in publications, appropriate visualizations are of great importance. While most current deep learning papers contain such visualizations, these are usually handcrafted just before publication, which results in a lack of a common visual grammar, significant time investment, errors, and ambiguities. Current automatic network visualization tools focus on debugging the network itself and are not ideal for generating publication-ready visualizations. Therefore, we present an approach to automate this process by translating network architectures specified in Python into visualizations that can be embedded directly into any publication. Our carefully crafted visual encodings incorporate abstraction through layer accumulation, which is often used to reduce the complexity of the network architecture to be communicated. Thus, our approach, which we evaluated through expert feedback and a quantitative study, not only reduces the time needed to generate publication-ready network visualizations, but also enables a unified and unambiguous visualization design.

Bibtex

@article{baeuerle21net2vis,
  title={Net2Vis: Transforming Deep Convolutional Networks into Publication-Ready Visualizations},
  author={B{\"a}uerle, Alex and van Onzenoodt, Christian and Ropinski, Timo},
  year={2021},
  journal={IEEE Transactions on Visualization and Computer Graphics},
  volume={27},
  pages={2980--2991},
  issue={6},
  doi={10.1109/TVCG.2021.3057483}
}