Variance-Aware Weight Initialization for Point Convolutional Neural Networks

European Conference on Computer Vision 2022

Abstract

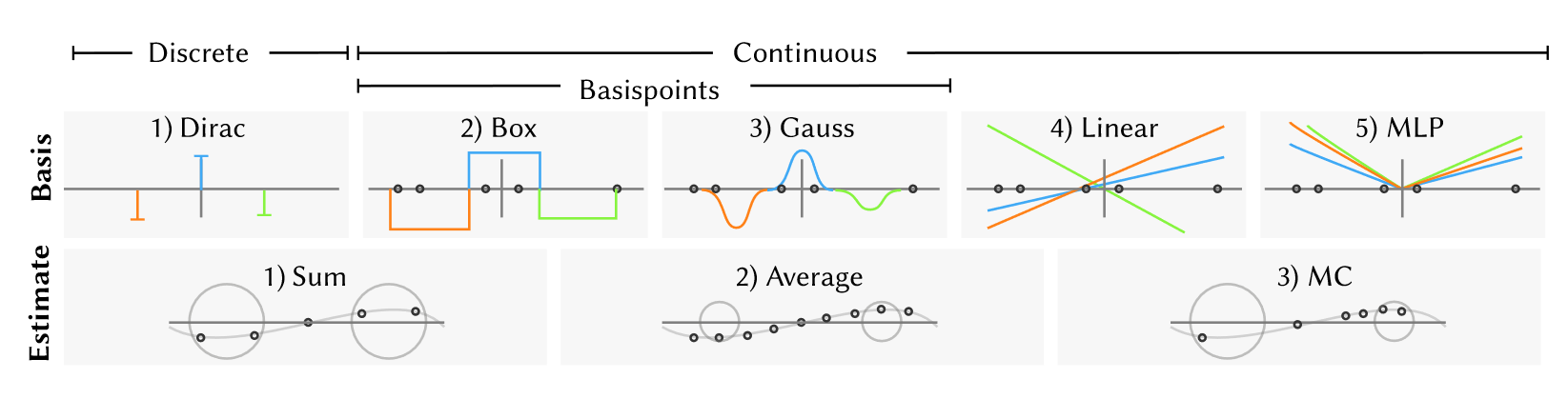

Appropriate weight initialization has been of key importance to successfully train neural networks. Recently, batch normalization has diminished the role of weight initialization by simply normalizing each layer based on batch statistics. Unfortunately, batch normalization has several drawbacks when applied to small batch sizes, which are required to cope with memory limitations when learning on point clouds. While well-founded weight initialization strategies can render batch normalization unnecessary and thus avoid these drawbacks, no such approaches have been proposed for point convolutional networks. To fill this gap, we propose a framework to unify the multitude of continuous convolutions. This enables our main contribution, variance-aware weight initialization. We show that this initialization can avoid batch normalization while achieving similar and, in some cases, better performance.

Bibtex

@inproceedings{hermosilla2020variance-aware,
  title={Variance-Aware Weight Initialization for Point Convolutional Neural Networks},
  author={Hermosilla, Pedro and Schelling, Michael and Ritschel, Tobias and Ropinski, Timo},
  booktitle={Proceedings of European Conference on Computer Vision},
  year={2022}
}