UNITE - Unified Semantic Transformer for 3D Scene Understanding

arXiv:2512.14364, 2025

Abstract

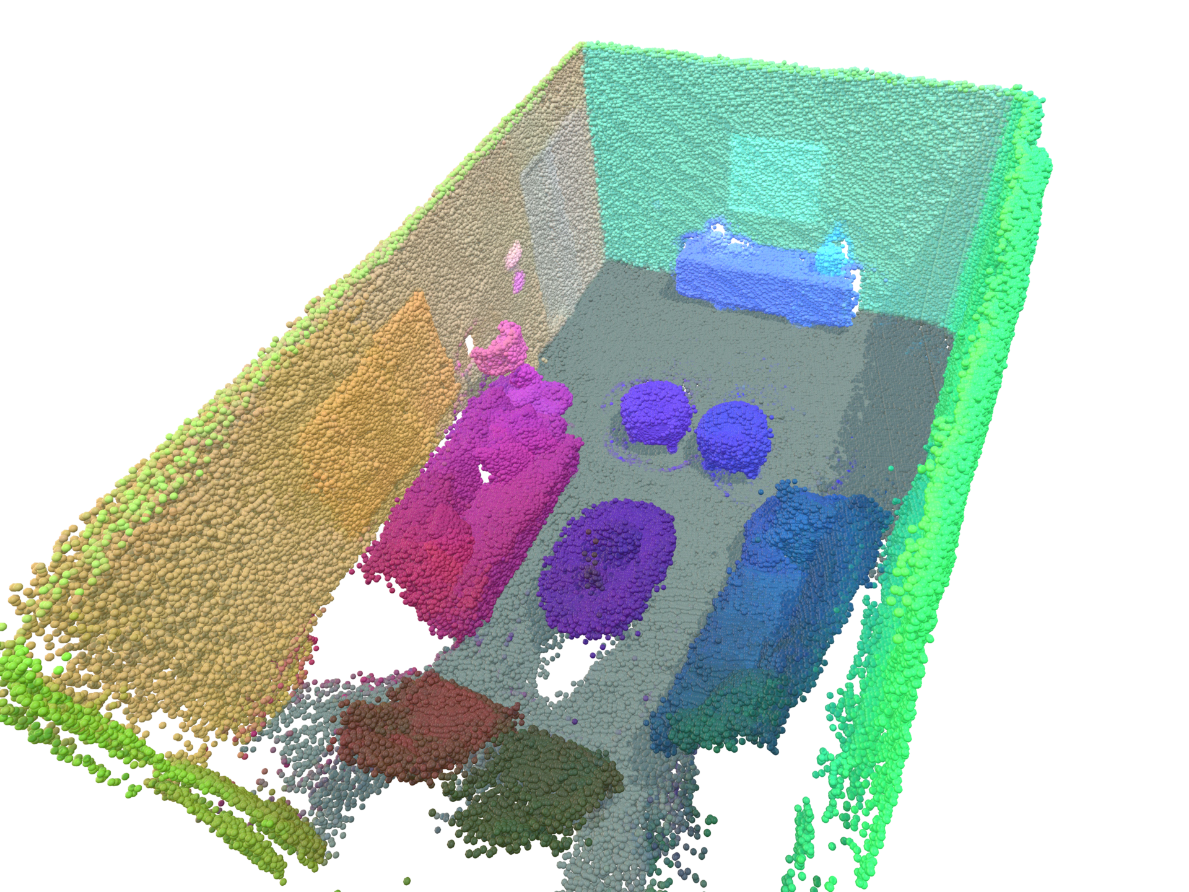

Holistic 3D scene understanding requires capturing and reasoning about complex, unstructured environments. Most existing approaches are tailored to a single downstream task, which makes it difficult to reuse representations across objectives. We introduce UNITE, a unified semantic transformer for 3D scene understanding: a feed-forward model that consolidates a diverse set of 3D semantic predictions within a single network. Given only RGB images, UNITE infers a 3D point-based reconstruction with dense features and jointly predicts semantic segmentation, instance-aware embeddings, open-vocabulary descriptors, and signals related to affordances and articulation. Training combines 2D distillation from foundation models with self-supervised multi-view constraints that promote 3D-consistent features across views. Across multiple benchmarks, UNITE matches or exceeds task-specific baselines and remains competitive even against methods that rely on ground-truth 3D geometry.
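The abstract describes the training signal only at a high level. The sketch below illustrates the two kinds of objectives it names. It is a minimal, hypothetical example that assumes:

- a cosine-similarity distillation loss against frozen 2D foundation-model features, and
- a cosine consistency loss between features of points that correspond across two views.

The function names, the cosine formulation, the `weight` parameter, and the assumption that cross-view correspondences (`matches`) are already given are all illustrative. This is not UNITE's actual loss.

```python
import numpy as np


def l2_normalize(x, eps=1e-8):
    """Normalize feature vectors along the last axis (eps avoids division by zero)."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def distillation_loss(student, teacher):
    """Mean (1 - cosine similarity) between student and frozen teacher features.

    student, teacher: arrays of shape (N, D), one row per pixel or point.
    Illustrative assumption: the 2D teacher features are already aligned to the
    student's pixels/points.
    """
    cos = np.sum(l2_normalize(student) * l2_normalize(teacher), axis=-1)
    return float(np.mean(1.0 - cos))


def multiview_consistency_loss(feats_a, feats_b, matches):
    """Penalize feature disagreement between corresponding points in two views.

    feats_a: (Na, D) features from view A; feats_b: (Nb, D) features from view B.
    matches: (M, 2) integer pairs (i, j), meaning point i in view A and point j
    in view B observe the same 3D location (hypothetical: assumed to be given).
    """
    fa = l2_normalize(feats_a[matches[:, 0]])
    fb = l2_normalize(feats_b[matches[:, 1]])
    return float(np.mean(1.0 - np.sum(fa * fb, axis=-1)))


def total_loss(student_a, student_b, teacher_a, teacher_b, matches, weight=1.0):
    """Hypothetical combination: per-view distillation plus a weighted cross-view term."""
    distill = distillation_loss(student_a, teacher_a) + distillation_loss(student_b, teacher_b)
    consist = multiview_consistency_loss(student_a, student_b, matches)
    return distill + weight * consist


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(4, 8))
    matches = np.array([[0, 0], [1, 1], [2, 2]])
    # Identical features in both views give a consistency loss close to 0.
    print(multiview_consistency_loss(feats, feats.copy(), matches))
```

The cosine form makes each term insensitive to feature magnitude, so only feature direction is compared. That is a common choice for feature distillation, but the paper may use a different distance.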

BibTeX

@article{koch2025unite,
  title={{UNITE} - Unified Semantic Transformer for {3D} Scene Understanding},
  author={Koch, Sebastian and Wald, Johanna and Matsuki, Hidenobu and Hermosilla, Pedro and Ropinski, Timo and Tombari, Federico},
  year={2025},
  journal={arXiv preprint arXiv:2512.14364}
}