From Natural to Nanoscale: Training ControlNet on Scarce FIB-SEM Data for Augmenting Semantic Segmentation Data

International Conference on Computer Vision Workshop 2025

Abstract

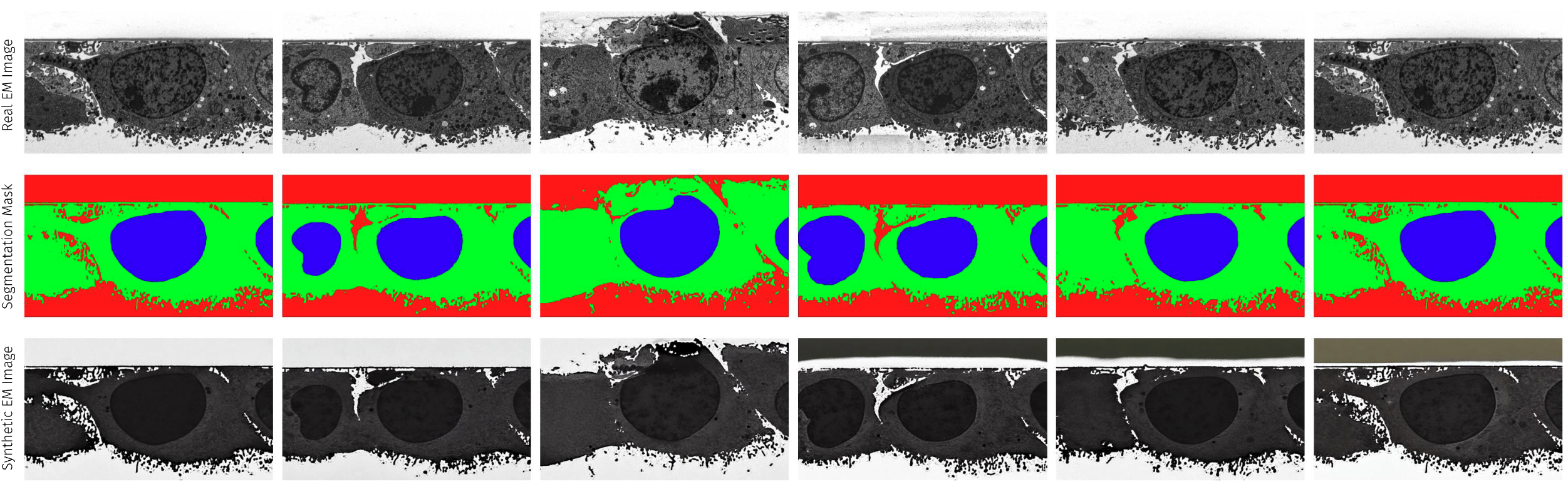

Focused Ion Beam Scanning Electron Microscopy (FIB-SEM) is widely used for ultrastructural imaging, and segmenting FIB-SEM stacks is essential for downstream quantification and biological analysis. However, manual annotation of these datasets is labor-intensive and time-consuming. Training semantic segmentation models offers a scalable alternative, but FIB-SEM datasets are typically small and exhibit low variance, since the imaging process captures successive sections of the same sample. This poses a significant challenge for model training. Data augmentation with generative models has emerged as a way to address limited data, with diffusion models showing state-of-the-art synthesis capabilities. Yet their reliance on large natural-image datasets restricts their direct application to FIB-SEM data. In this work, we explore fine-tuning ControlNet, a conditional extension of diffusion models, on small FIB-SEM datasets to produce realistic, label-consistent synthetic images for segmentation. Despite relying on a diffusion backbone trained exclusively on natural images, we show that fine-tuning ControlNet with domain-specific structural cues enables effective data augmentation, leading to downstream mIoU improvements of up to +15.4. We compare ControlNet augmentations against standard augmentation techniques with respect to both generation time and downstream task performance. We additionally explore different dataset sizes and provide insights into the feasibility of applying large-scale generative models in data-scarce, low-variance scientific imaging domains like FIB-SEM.
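The downstream gains above are measured in mean Intersection-over-Union (mIoU). As a reminder of what that number captures, the following is a minimal plain-Python sketch of the standard metric: per-class IoU (overlap divided by union of predicted and ground-truth pixels), averaged over classes. The function name `mean_iou` and the convention of skipping classes absent from both masks are our own assumptions for illustration, not necessarily the evaluation protocol used in the paper.

```python
from collections import Counter
from typing import Sequence


def mean_iou(pred: Sequence[int], target: Sequence[int], num_classes: int) -> float:
    """Hypothetical helper: mean Intersection-over-Union over classes.

    pred and target are flattened per-pixel class labels of equal length.
    Assumption: classes absent from both masks are skipped rather than
    being scored as IoU 0 or 1.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same number of pixels")
    # Per class, count pixels where prediction and ground truth agree.
    intersection = Counter(p for p, t in zip(pred, target) if p == t)
    pred_counts = Counter(pred)
    target_counts = Counter(target)
    ious = []
    for c in range(num_classes):
        # |A ∪ B| = |A| + |B| - |A ∩ B|
        union = pred_counts[c] + target_counts[c] - intersection[c]
        if union == 0:
            continue  # class appears in neither mask
        ious.append(intersection[c] / union)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    # Two classes on a 4-pixel toy mask: IoU_0 = 1/2, IoU_1 = 2/3.
    score = mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
    print(f"mIoU = {100 * score:.2f}")  # mIoU is often reported as a percentage
```

On the toy mask the two per-class scores average to 7/12, i.e. about 58.33 when expressed as a percentage. In practice the same computation is usually vectorized over full label images, for example with NumPy.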

BibTeX

@inproceedings{kniesel2024from,
  title={From Natural to Nanoscale: Training ControlNet on Scarce FIB-SEM Data for Augmenting Semantic Segmentation Data},
  author={Kniesel, Hannah and Rapp, Pascal and Hermosilla, Pedro and Ropinski, Timo},
  booktitle={Proceedings of the International Conference on Computer Vision Workshop},
  year={2025}
}