Enabling Viewpoint Learning through Dynamic Label Generation

Computer Graphics Forum (Proc. of Annual Conference of the European Association for Computer Graphics) 2021

Abstract

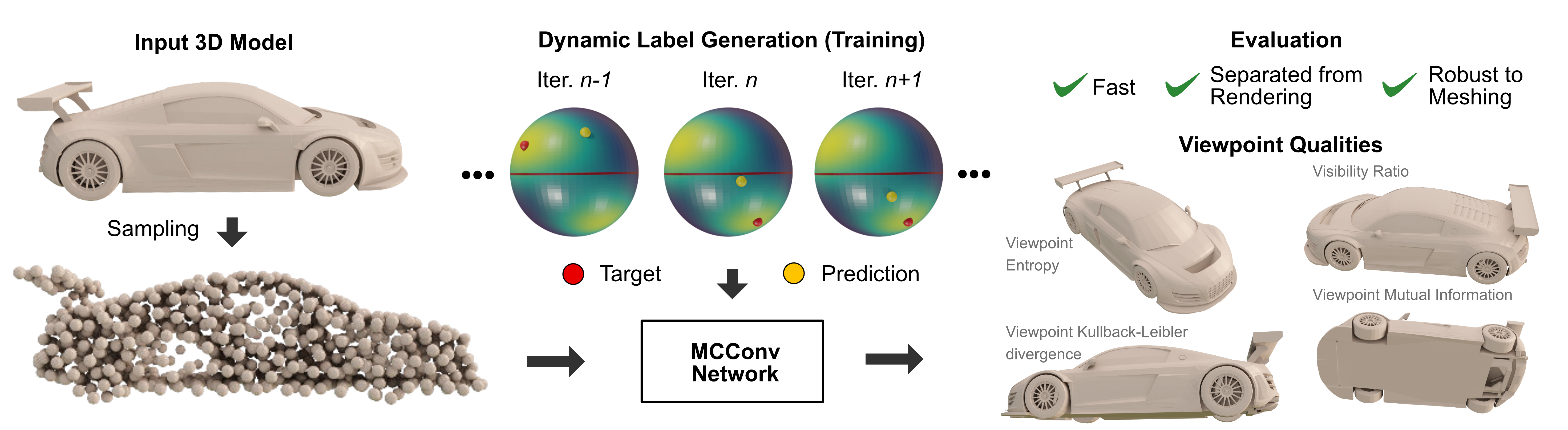

Optimal viewpoint prediction is an essential task in many computer graphics applications. Unfortunately, common viewpoint qualities suffer from major drawbacks: dependency on clean surface meshes, which are not always available; insensitivity to upright orientation; and the lack of closed-form expressions, which requires a costly sampling process involving rendering. We overcome these limitations through a 3D deep learning approach, which solely exploits vertex coordinate information to predict optimal viewpoints under upright orientation, while reflecting both informational content and human preference analysis. To enable this approach, we propose a dynamic label generation strategy, which resolves inherent label ambiguities during training. In contrast to previous viewpoint prediction methods, which evaluate many rendered views, we directly learn on the 3D mesh and are thus independent of rendering. Furthermore, by exploiting unstructured learning, we are independent of mesh discretization. We show how the proposed technology enables learned prediction of viewpoints directly from 3D models for different object categories and viewpoint qualities. Additionally, we show that prediction times are reduced from several minutes to a fraction of a second, as compared to viewpoint quality evaluation. We will release the code and training data, which, to our knowledge, will be the largest viewpoint quality dataset available.
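The abstract does not spell out how dynamic label generation works, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It assumes that the label ambiguity comes from several viewpoints being (nearly) equally good, for example because of object symmetry. Instead of a single fixed target, every viewpoint whose quality lies within a relative tolerance of the best one is kept as a candidate. At each training step, the candidate closest to the network's current prediction is used as the target. All names (`fibonacci_sphere`, `candidate_labels`, `dynamic_label`, `cosine_loss`, `tolerance`) are hypothetical. The Fibonacci-sphere sampling and the cosine loss are stand-ins, and the toy quality `|x|` used in the usage note is not a real viewpoint quality measure.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return tuple(c / n for c in v)


def fibonacci_sphere(n):
    """Return n roughly uniformly spread unit vectors (candidate view directions).

    Assumption: a Fibonacci lattice stands in for the paper's viewpoint sampling.
    """
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(n):
        y = 1.0 - 2.0 * (i + 0.5) / n          # height in (-1, 1)
        r = math.sqrt(1.0 - y * y)             # radius of the horizontal circle
        theta = golden_angle * i
        points.append((r * math.cos(theta), y, r * math.sin(theta)))
    return points


def candidate_labels(views, qualities, tolerance=0.05):
    """Keep every view whose quality is within `tolerance` (relative) of the best.

    Hypothetical rule: these near-optimal views form the ambiguous label set.
    """
    best = max(qualities)
    threshold = best - tolerance * abs(best)
    return [v for v, q in zip(views, qualities) if q >= threshold]


def dynamic_label(prediction, candidates):
    """Pick the candidate direction with the largest cosine to the prediction."""
    p = normalize(prediction)
    return max(candidates, key=lambda c: sum(a * b for a, b in zip(p, c)))


def cosine_loss(prediction, label):
    """1 - cosine similarity between predicted and target view directions."""
    p = normalize(prediction)
    t = normalize(label)
    return 1.0 - sum(a * b for a, b in zip(p, t))
```

As a usage example, a toy quality `abs(v[0])` rates the views along `+x` and `-x` as equally good. With `cands = candidate_labels(fibonacci_sphere(500), [abs(v[0]) for v in fibonacci_sphere(500)])`, a prediction near `+x` receives a target near `+x`, and a prediction near `-x` receives one near `-x`. A single fixed label would instead penalize one of two equally valid answers. This is the kind of ambiguity the abstract says dynamic labels resolve.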

BibTeX

@article{schelling2020enabling,
  title={Enabling Viewpoint Learning through Dynamic Label Generation},
  author={Schelling, Michael and Hermosilla, Pedro and V{\'a}zquez, Pere-Pau and Ropinski, Timo},
  year={2021},
  journal={Computer Graphics Forum (Proc. of Annual Conference of the European Association for Computer Graphics)},
  volume={40},
  number={2},
  pages={413--423},
  doi={10.1111/cgf.142643}
}